Automate your materials planning and production scheduling today.

Bottleneck helps CPG brands and manufacturers manage their supply chain, forecast sales, track inventory, schedule production, collaborate internally and externally, and much more.

Modules to meet your every need

Your team wastes dozens of hours every week searching for inventory, forecasting materials needs, tracking down shipments and scheduling production. Bottleneck makes it easy for users to manage their supply chain, operations and finance from an intuitive, cloud-based, single source of truth.

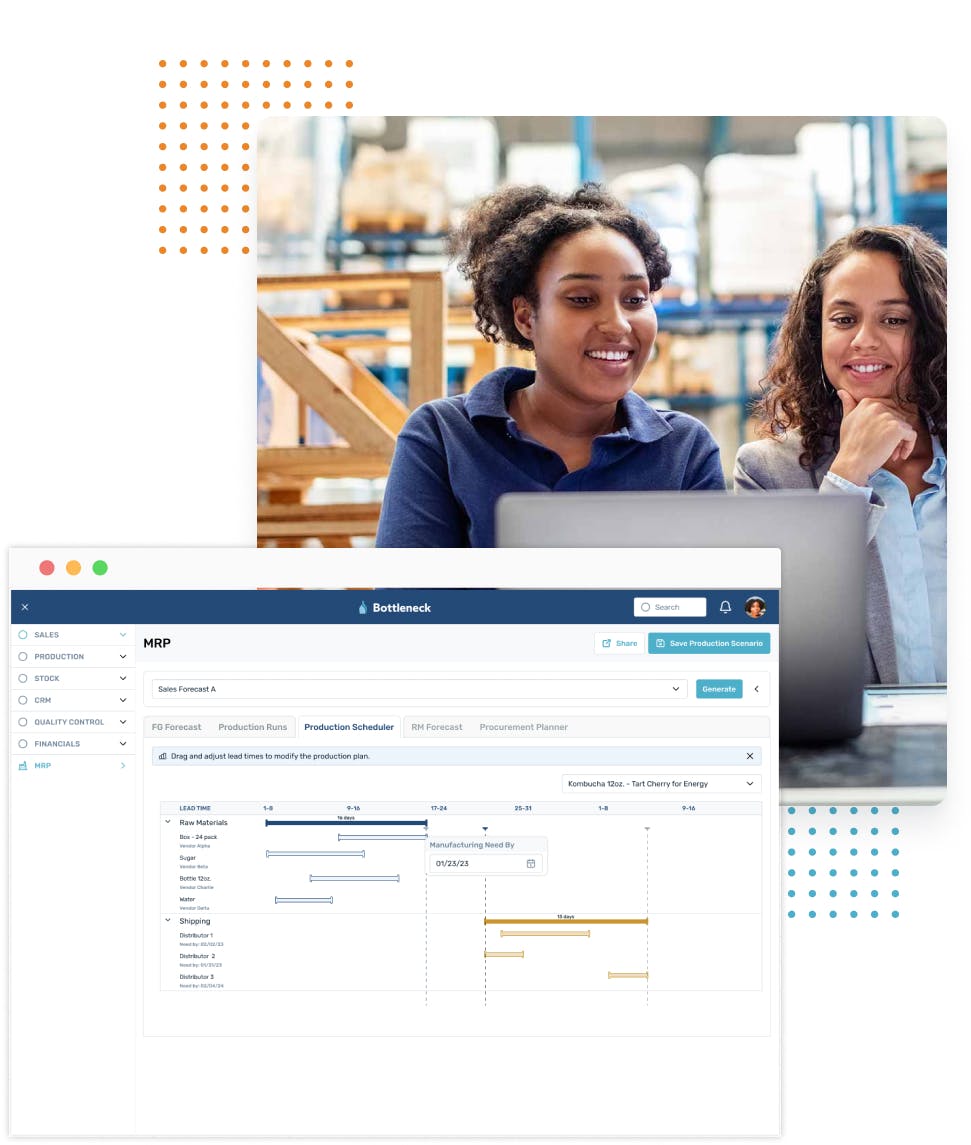

Scheduling

Automate production planning

Quality Control

Manage audits digitally

Automation

AI-powered tools reduce errors

We bring AI to the CPG supply chain

Bottleneck is on a mission to build collaborative software for the CPG industry with a suite of modules and embedded AI for brands, manufacturers, suppliers and vendors.

Enhanced Productivity

Optimize inventory levels and production schedules

Automated Processes

Eliminate manual work and cut wasted time

Advanced Analytics

Synthesize data instantly to make faster, better decisions

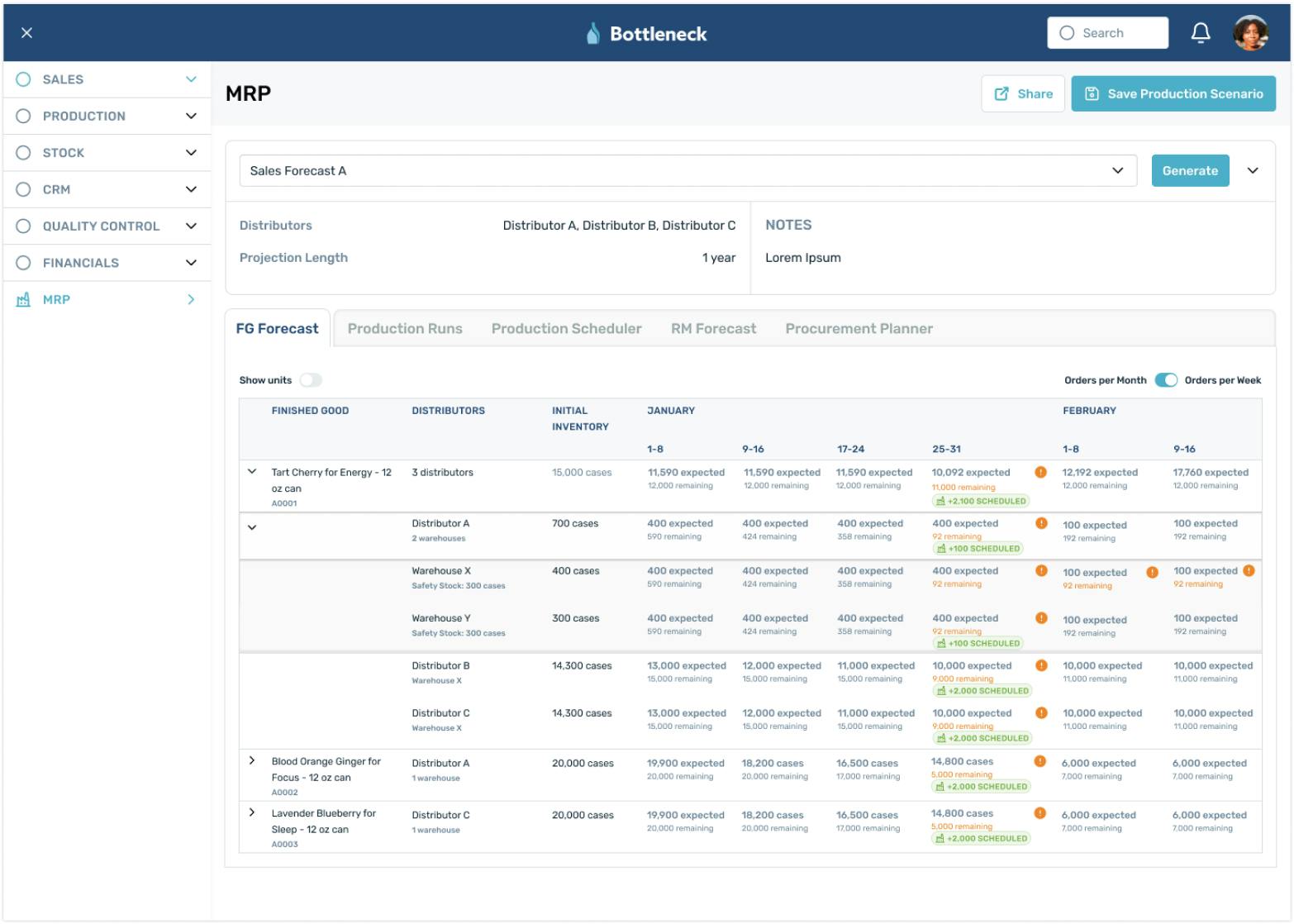

We redefine materials requirement planning using automation

Automated Forecasting

Streamline your sales, operations and finance with AI-driven predictions.

Actionable Insights

Empower your decisions with data-driven recommendations and interconnected modules.

Real-Time Notifications

Stay ahead of disruptions with instant alerts and notifications for your team and customers.

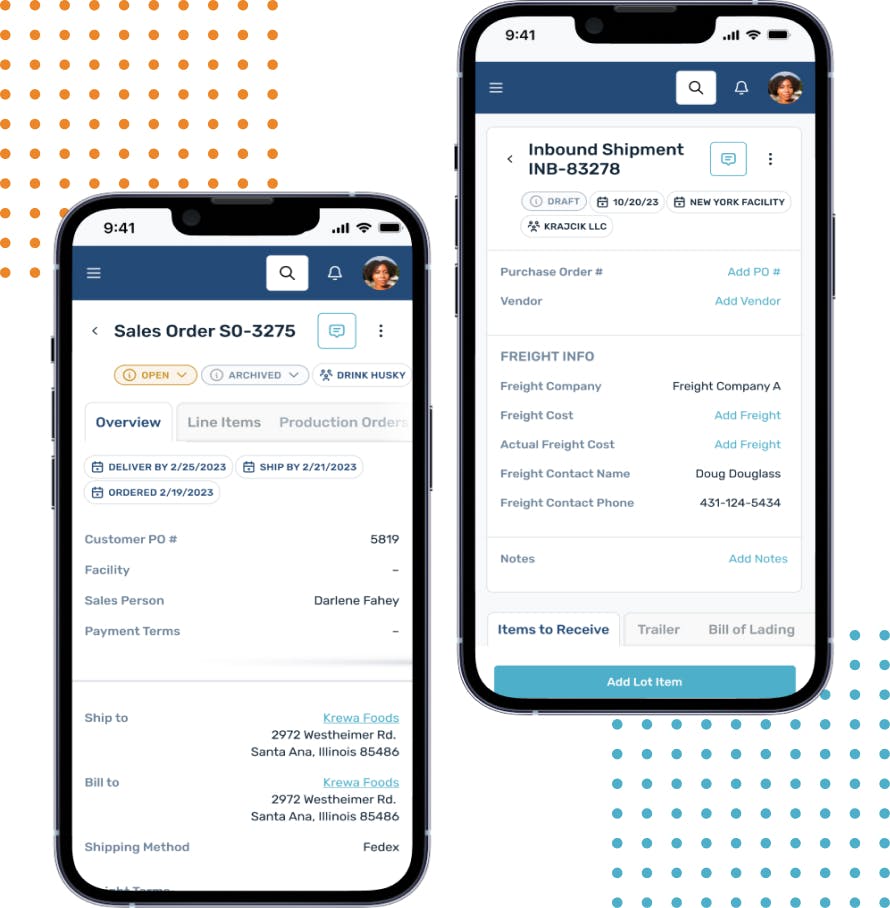

Manage supply chains anywhere

Crisp, clean UX and mobile functionality

Bottleneck’s modern UI and mobile functionality let you focus on your supply chain operations from anywhere. Bottleneck helps businesses of all shapes and sizes.

- Manage every workflow with desktop or mobile views

- Filter views according to your business segment or job

- Manage shop floor operations with ease anywhere, anytime

- Get notifications when you’re on the road or working remotely

Bottleneck features interconnected modules

CRM

Inventory

Production

MRP

Digital QC

Sales

Reports

Dashboard

Messaging

Alerts

Financial

Security

Choose the right plan for your team

- Customer & Vendor CSM

- Sales

- Procurement

- Inventory

- Warehouse Management

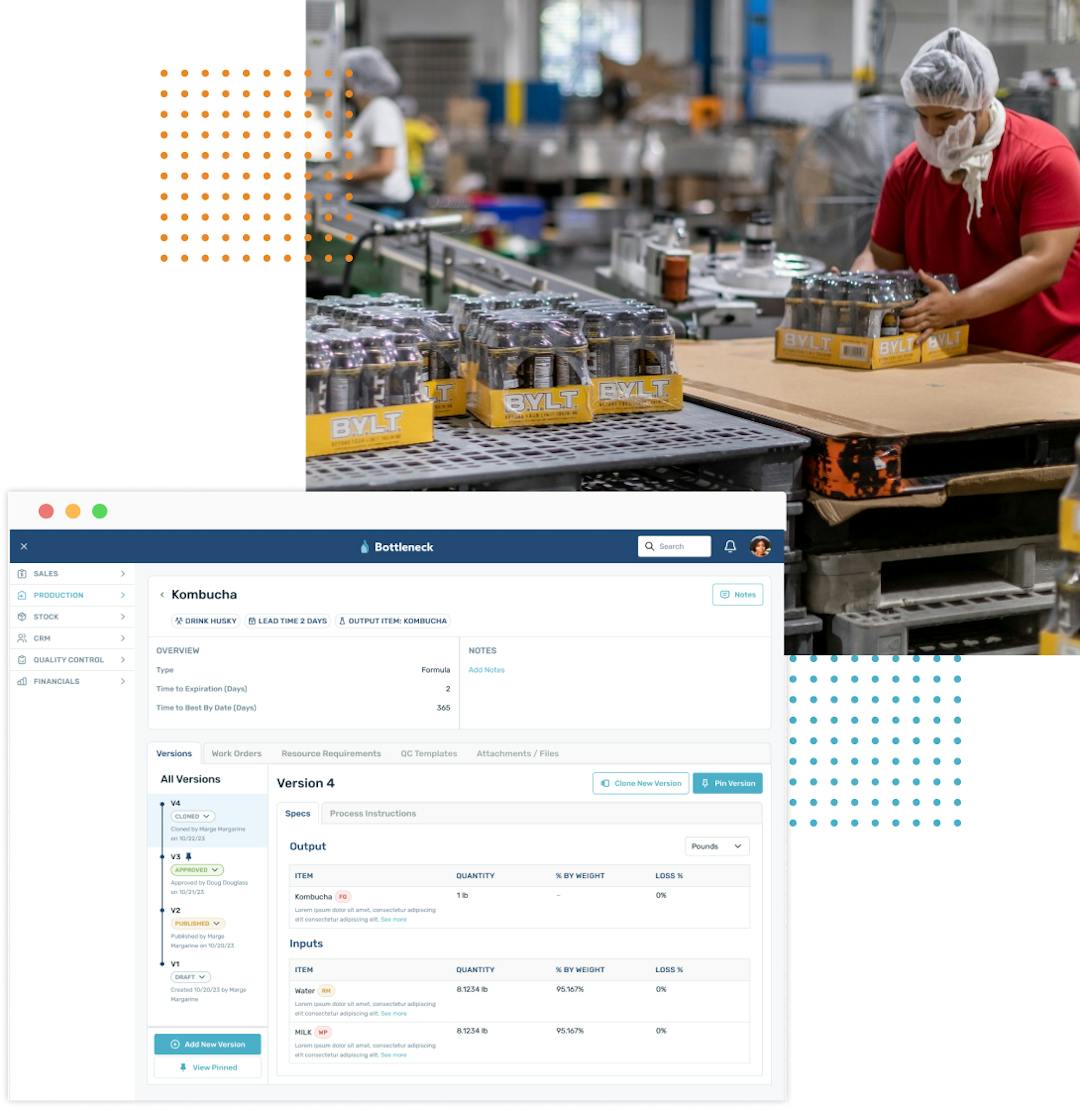

- Formulas & Bill of Materials

- Production Planning & Execution

- Materials Requirement Planning (MRP)

- Quality Control

- Messaging

- Advanced File Management

- Advanced Collaboration Suite

- Additional enterprise integrations

- Custom reports and dashboards

- Custom features & add-ons

- Extended onboarding & training

- Sales

- Procurement

- Inventory

- Warehouse Management

- Formulas & Bill of Materials

- Materials Requirement Planning

Get started today with our AI-powered MRP module

Use AI to improve materials planning & procurement.

We redefine modern materials and production planning with dashboards to help your team make better decisions and reduce COGS.